World Wide Web.

Imagine a world without instant access to information. No online banking, no video calls, no streaming movies, and no social media to connect with friends and family across the globe.

This was the reality just a few decades ago, before the invention of the World Wide Web (WWW). The Web didn’t just add a new layer to our lives; it fundamentally reshaped almost every aspect, from how we learn and work to how we relax and entertain ourselves.

Since its humble beginnings, the Web has grown to an almost unimaginable scale. In April 1993, as the Web was just starting to gain traction, there were only 623 websites.

Today, that number has skyrocketed to over 1.1 billion websites, with an estimated 5 billion people using the Internet. But how did this revolutionary technology come to be?

The story of the World Wide Web begins in the late 1980s at CERN, the European Organization for Nuclear Research. Thousands of scientists from over 100 countries were collaborating on complex projects, and there was a desperate need for a system that would allow them to share information quickly and efficiently.

World Wide Web in World.

At the time, an English computer scientist named Tim Berners-Lee was working at CERN on a project to develop an internal network. He and his colleague Robert Cailliau are widely credited as the co-inventors of the World Wide Web.

In 1989, Berners-Lee proposed a project for a global hypertext system, which could be accessed from anywhere. This system of linked information pages, or “web pages,” would become the foundational pillar of the future Web.

The technology was initially released to other research institutes in January 1991 and then made available to the wider public on August 23, 1991. The Web was a success at CERN and began to spread to other scientific and academic institutions.

Over the next two years, 50 websites were created, a far cry from the more than one billion we have today.

From Mosaic to the Dot-Com Bubble.

A pivotal moment in the Web’s history came in 1993 when CERN made the WWW protocol and code freely available to the public, paving the way for its widespread adoption.

Later that same year, the National Center for Supercomputing Applications (NCSA) released the Mosaic web browser.

The arrival of Mosaic, a graphical browser that could display embedded images and process forms, ignited a massive surge in the Web’s popularity, with thousands of sites appearing in less than a year.

The following year, Mosaic’s co-creator, Marc Andreessen, and Jim Clark founded Netscape and released the Navigator browser. Later versions of Navigator brought groundbreaking technologies such as Java applets and JavaScript to the Web, and it quickly became the dominant browser.

When Netscape went public in 1995, it created a massive stir in the tech world and helped set off the infamous dot-com bubble. In response, Microsoft developed its own browser, Internet Explorer, sparking the “browser wars.”

By bundling Internet Explorer with its Windows operating system, Microsoft eventually won a significant share of the market.

It’s interesting to note that the Internet as an information and communication network had existed long before this, but it only became a truly mass phenomenon with the invention of the Web and, of course, the user-friendly browsers that made it accessible to everyone.

To make the WWW project a reality, Berners-Lee and his colleagues developed the URI, the HTTP protocol, and the HTML language.

Without these key components, the Web would likely not have achieved such widespread use. Berners-Lee also created the world’s first web server and the very first website, which went live on August 6, 1991, at http://info.cern.ch/.

This resource contained information about how to install and use a web server and browser, and it later served as a directory of links to other websites.
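
To get a feel for how these three pieces fit together, here is a small Python sketch. It splits the first website’s address into its URI parts, writes out the text of a simple HTTP request for that address, and pulls the links out of a tiny piece of HTML. The sample HTML and the helper names (`describe_uri`, `build_get_request`, `extract_links`) are made up for this illustration, and the request is a simplified, modern-style one rather than exactly what the earliest servers received.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit

FIRST_SITE = "http://info.cern.ch/"

# Hypothetical sample page, written only for this example.
SAMPLE_HTML = (
    "<h1>Example page</h1>"
    '<p>See the <a href="http://info.cern.ch/">first website</a>.</p>'
)


def describe_uri(uri):
    """Split an address into the parts a browser needs (the URI piece)."""
    parts = urlsplit(uri)
    return {"scheme": parts.scheme, "host": parts.netloc, "path": parts.path or "/"}


def build_get_request(uri):
    """Build the text a browser could send to ask for a page (the HTTP piece)."""
    parts = urlsplit(uri)
    path = parts.path or "/"
    return f"GET {path} HTTP/1.0\r\nHost: {parts.netloc}\r\n\r\n"


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag (the HTML piece)."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html):
    """Return all link targets found in a piece of HTML."""
    collector = LinkCollector()
    collector.feed(html)
    return collector.links


print(describe_uri(FIRST_SITE))
print(build_get_request(FIRST_SITE))
print(extract_links(SAMPLE_HTML))
```

The address tells the browser where to go, the request is how it asks, and the links inside the returned HTML are what turn separate pages into a web.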

While there are over 1.1 billion websites today, a much smaller number, around 200 million, are considered active. In the 1990s, websites primarily consisted of text and static images. Internet access was slow, so animations and interactivity were very limited.

By the turn of the millennium, 44% of North Americans were using the Internet. It’s only in the last two decades that the Web has become truly global, with user numbers dramatically increasing in every region, including Latin America, the Middle East, and North Africa.

The Era of Google and Social Media.

The rise of the Web coincided with the ubiquity of email and a new wave of entrepreneurship. Companies began to leverage e-commerce, leading to an explosion in the number of websites in the late 1990s.

Even before Google, users could find information on sites like IMDb, which started as a fan-run database in 1990. In 1998, users could search for books and read reviews on Amazon’s text-heavy homepage.

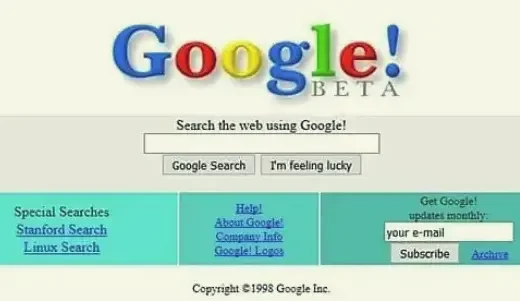

But a seismic shift occurred with the launch of the Google search engine in 1998, which was started as a research project by two students, Sergey Brin and Larry Page.

By the end of that year, Google had indexed an impressive 60 million pages. It has since grown to become the world’s most popular search engine, a true titan of the Internet.

In the early 2000s, another major transition began with the emergence of the first social networks. Users started to shift from being passive consumers of content to active creators.

This transition is often described as the move from Web 1.0 to Web 2.0, a shift that began around 2004 and continues to this day.

A comparison of the most-visited websites in 1993 versus 2023 clearly shows this change, with social media sites dominating the current list of leaders.

For example, one source notes that every single minute Facebook users post 510,000 comments, update 293,000 statuses, and upload 136,000 photos.

For people under the age of 30, the ability to access information instantly is a given. They’ve grown up with an open-access world, developing skills, habits, and a vocabulary that simply didn’t exist before the advent of the World Wide Web.

The First Computer.

The ENIAC.

Before the sleek laptops and powerful smartphones of today, the world’s first electronic general-purpose computer was a behemoth. We’re talking about the ENIAC, or Electronic Numerical Integrator and Computer. Its existence was revealed to the public in February 1946.

This monstrous machine weighed 27 tons and, if built today, would cost an estimated $7.2 million. The ENIAC was initially designed for military purposes, which came as no surprise given the era.

The army needed it to quickly calculate the trajectories of artillery shells and other projectiles. The process of configuring the computer for different tasks, however, looked very different from modern-day programming.

Programmers would physically walk around the room, switching wires and turning control knobs. Their work was more of a series of mechanical actions than the abstract coding we know today.

Development of the ENIAC began in 1942 at the University of Pennsylvania’s Moore School of Electrical Engineering.

At the time, human operators manually calculated the flight trajectories of artillery shells. They had to account for a staggering number of variables, including the gun’s position, wind speed, air temperature, and the projectile’s velocity.

The military needed to know the flight trajectories for approximately 3,000 different shells, and each trajectory required about 1,000 separate calculations. A human operator could take up to 16 days to complete just one of these trajectories.

The results of these calculations were compiled into “firing tables” that allowed military personnel to accurately hit enemy targets.
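
A quick back-of-the-envelope calculation shows why this was such a bottleneck. The Python sketch below uses only the figures quoted above and treats the 16 days as a worst case for every trajectory, so the totals are an upper bound. The team size in `days_with_team` is a hypothetical input, not a historical figure.

```python
TRAJECTORIES = 3_000           # shells that needed a trajectory
CALCS_PER_TRAJECTORY = 1_000   # separate calculations per trajectory
DAYS_PER_TRAJECTORY = 16       # worst case for one human operator

# Total number of individual calculations across all trajectories.
total_calculations = TRAJECTORIES * CALCS_PER_TRAJECTORY

# Total working days if a single operator did everything at the worst-case pace.
operator_days = TRAJECTORIES * DAYS_PER_TRAJECTORY


def days_with_team(operators):
    """Days needed if the work is split evenly across a team (hypothetical size)."""
    return operator_days / operators


print(total_calculations)   # 3000000
print(operator_days)        # 48000
print(days_with_team(100))  # 480.0
```

Even with a hypothetical team of 100 operators working in parallel, the worst case comes out to well over a year of work.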

The ENIAC was designed to automate this process of creating firing tables. While it was less powerful than a modern calculator, its capabilities were astounding for its time. It could perform 357 multiplications or 5,000 additions per second.

Its technical execution was incredibly complex, and the computer frequently failed. Initially, it would break down twice a day, but the developers later installed more reliable components, reducing failures to about one every two days.

Unlike modern computers, the ENIAC didn’t have software programs that could be installed. To perform a different task, it had to be reconfigured manually.

Operators had to plug and unplug hundreds of wires into different connectors and turn countless knobs.

At the time, this work didn’t even have an official name, but in reality, the ENIAC operators were the world’s first programmers. Programming the ENIAC was a process that could take weeks and was a world away from the efficient coding of today.

The ENIAC was eventually retired in 1955, as more powerful computers began to emerge.

However, this pioneering machine is still regarded as a forerunner of the modern computer because it could be reconfigured to solve a wide variety of problems.

The New Frontier.

Programming for Kids.

In today’s world, the IT field is one of the most promising and in-demand areas for both education and careers. Even a minimal ability to code is a huge advantage when applying for jobs, and it’s valued almost as highly as knowing a foreign language.

This is why more and more parents are choosing to enroll their children in courses related to programming. But how useful are these classes, and how can you tell if the IT field is the right fit for your child?

First and foremost, it’s crucial to identify a child’s genuine interest. While anyone can learn to program, it’s far more effective when it’s something that truly excites them.

Pay attention to whether your child is fascinated by technology, computers, and even video games.

If they spend a lot of time on the Internet and show curiosity about how websites are built, then IT could be a perfect fit. It’s worth noting, however, that an interest in video games doesn’t always translate into a love for programming.

The interest often starts and ends with playing the games themselves.

Programming requires a special kind of mindset. It demands logical thinking and problem-solving skills. Writing code is meticulous work that requires both patience and perseverance.

The best age to start programming courses is often considered to be around 10 years old, although many courses are designed for even younger children.

These programs often use specialized, adapted programming languages that allow kids to learn how to create simple games and applications.

When choosing a school, it’s important to find the right fit for your child’s age and skill level. If the program is too difficult or boring, they will quickly lose interest.

Here are a few types of courses to look for:

• Introductory Programs (Ages 6–14): These courses teach children basic computer and Internet literacy, and how to use applications and programs.

• Computer Graphics Courses (Ages 6–10): Perfect for creative children, these courses teach them to create their favorite cartoon and video game characters or even invent their own. They also learn how to create special effects and transform drawings.

• Scratch (Ages 6–10): Ideal for kids interested in animation and games. This course teaches them how to create simple cartoons and video games using a visual, block-based programming language.

• Python (Ages 12–15): A more advanced course that introduces Python, a widely used and versatile text-based programming language, and teaches kids how to build games and applications with it.

• Full-Stack Web Development (Ages 14–17): For teens who already have some programming experience, these courses teach them how to build websites and applications from scratch.

They provide a foundational understanding of key languages and tools such as Python, JavaScript, Django, HTML, and CSS.
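
To give a sense of what a first text-based project might look like, here is a small number-guessing game in Python. It is only an illustrative sketch, not taken from any particular course: the function names `check_guess` and `play` are invented for this example, and the guesses are passed in as a list so the code can be run and checked without typing anything.

```python
import random


def check_guess(secret, guess):
    """Compare one guess with the secret number and return a hint."""
    if guess < secret:
        return "too low"
    if guess > secret:
        return "too high"
    return "correct"


def play(secret, guesses):
    """Go through the guesses in order.

    Returns the number of attempts used, or None if the secret was never guessed.
    """
    for attempt, guess in enumerate(guesses, start=1):
        result = check_guess(secret, guess)
        print(f"Guess {attempt}: {guess} is {result}")
        if result == "correct":
            return attempt
    return None


# The computer picks a number, then we try a fixed list of guesses.
secret_number = random.randint(1, 20)
attempts = play(secret_number, [10, 5, 15, 3, 8, 13, 18])
print("Solved!" if attempts else f"Not solved, the number was {secret_number}")
```

A natural next step for a young learner would be to replace the fixed list with numbers typed in using Python’s built-in `input()` function, which turns the sketch into a game they can actually play.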

In the world of IT, proficiency in English and mathematics is incredibly important. The sooner a child starts to develop these skills, the easier it will be for them to master programming languages and code.

Learning to program offers significant benefits that extend beyond a future career. It helps children develop a variety of useful skills:

• Logic and Mathematical Thinking: The process of solving problems and building algorithms teaches children to think logically, a skill that’s valuable in all aspects of life.

• Creativity: Surprisingly, programming is closely tied to creativity. The process of creating games and animations helps children develop their imagination and learn to think outside the box.

• Language Skills: The vast majority of programming terms and code are in English. This exposure helps children become more comfortable with the language.

• Perseverance and Patience: Creating even a simple game is a meticulous and lengthy process that requires patience and focus.

Specialized schools and training centers focused on IT education are the best places for children to acquire these skills. They provide a structured environment with instructors who can guide and motivate young learners.

I hope you have a great day!